A new study by researchers at Cornell Tech and the University of Pennsylvania shows that freelance writers suspected of using AI receive worse evaluations and hiring outcomes. Freelancers whose profiles suggested East Asian identities were more likely to be suspected of using AI than those whose profiles suggested they were white Americans. And men were more likely to be suspected of using AI than women.

Cornell AI News

AI suggestions make writing more generic, Western

Artificial intelligence-based writing assistants are popping up everywhere – from phones to email apps to social media platforms.

But a new study from Cornell – one of the first to show an impact on the user – finds these tools have the potential to function poorly for billions of users in the Global South by generating generic language that makes them sound more like Americans.

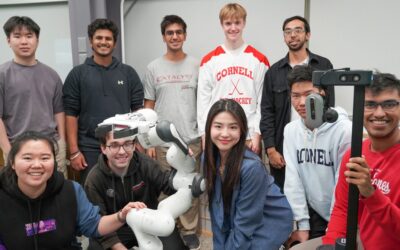

Choudhury wins Navy Young Investigator award to train robots

Sanjiban Choudhury, assistant professor of computer science in the Cornell Ann S. Bowers College of Computing and Information Science, just received a three-year, $750,000 Young Investigator Program award from the Office of Naval Research (ONR) to develop new ways to train robots to perform complex, multistep tasks, such as inspecting and repairing ship engines.

Race-blind college admissions harm diversity without improving quality

Critics of affirmative action in higher education have argued that the policy deprives more qualified students of a spot at a university or college. A new study by Cornell researchers finds that ignoring race leads to an admitted class that is much less diverse, but with similar academic credentials.

AI for Sustainability Visiting Professorship launches at Cornell

The AI for Sustainability (AI4S) Visiting Professorship has launched at Cornell, designed to bring faculty scholars from across the world to the university to tackle pressing global challenges in sustainability through the power of artificial intelligence.

New algorithm picks fairer shortlist when applicants abound

Cornell researchers developed a more equitable method for choosing top candidates from a large applicant pool in cases where insufficient information makes it hard to choose.

While humans still make many high-stakes decisions – like who should get a job, admission to college or a spot in a clinical trial – artificial intelligence (AI) models are increasingly used to narrow down the applicants into a manageable shortlist.

Visiting lecturer will explore expanded vision for AI in research

Polymath scholar Sendhil Mullainathan ’93, a behavioral economist who has combined computational and social sciences to produce pioneering work on health care, poverty and the criminal justice system, will deliver three public lectures at Cornell Nov. 11-13 for the Messenger Lectures series.

Rising star Ben Laufer: Improving accountability and trustworthiness in AI

With artificial intelligence increasingly integrated into our daily lives, one of the most pressing concerns about this emerging technology is ensuring that new innovations account for their impact on individuals from different backgrounds and communities. The work of researchers like Cornell Tech PhD student Ben Laufer is critical for understanding the social and ethical implications of algorithmic decision-making.