AI + Research

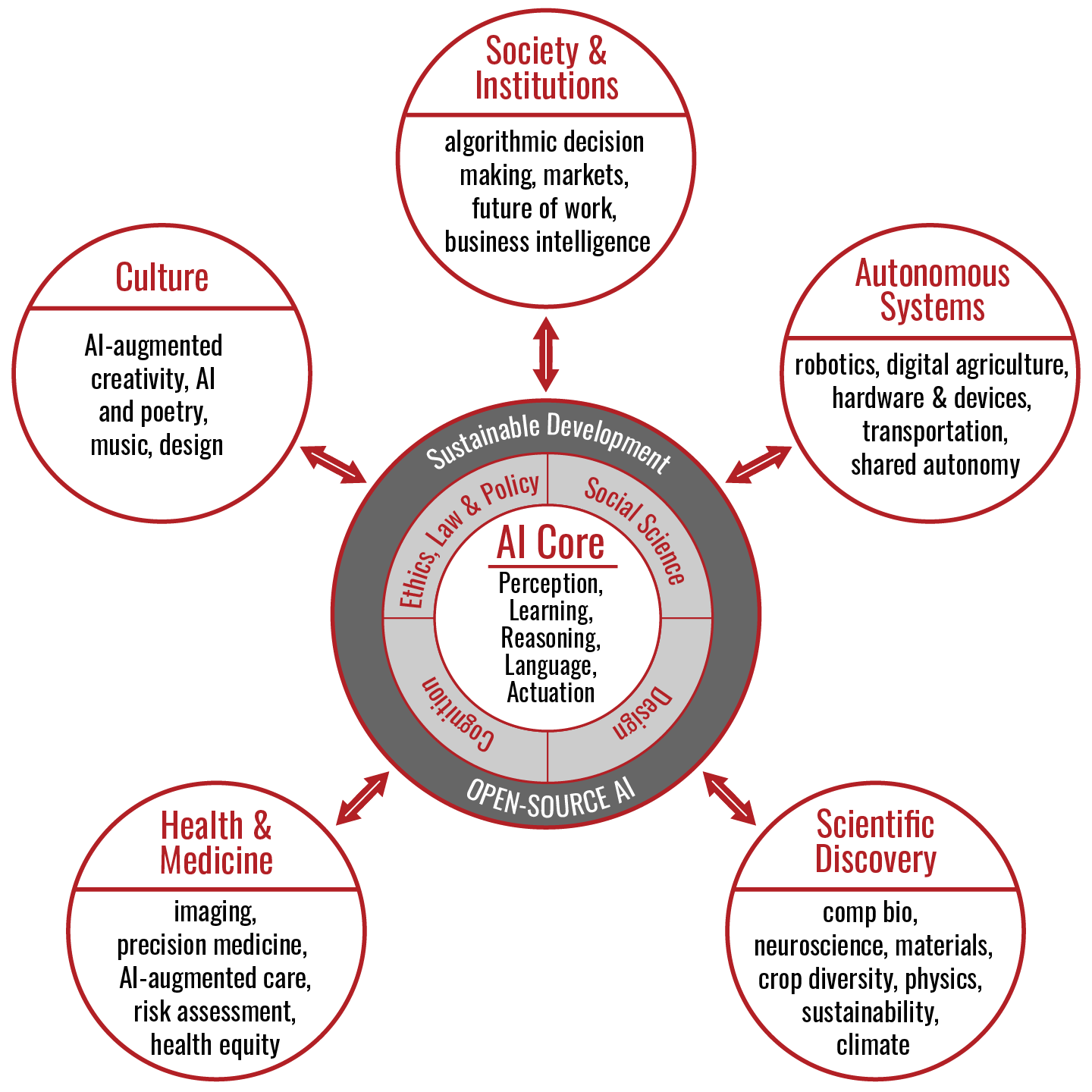

At Cornell, artificial intelligence is both a subject of inquiry — ranging from algorithms and machine learning to ethics and human-AI interaction — and a tool that advances how we conduct research.

AI research at Cornell is deeply interdisciplinary, combining technical innovation with human-centered insight.

Across campuses and departments, researchers are pushing the boundaries of AI and machine learning. Cornell also draws on its strengths in the social sciences to shape, investigate, and apply AI while examining its broader societal impact.

Advancing AI Research

Cornell’s vision for AI tightly integrates the development of algorithmic capabilities with an understanding of the interplay of AI with people, institutions, and real-world applications. This includes supporting research that ranges from core AI methods to human-AI interaction, as well as using AI to accelerate discovery across disciplines.

Cornell is committed to building upon its existing leadership in AI to shape and drive the development and practice of AI for a sustainable future. In particular, we envision AI that is designed to engage with people and that sustainably serves humanity.

AI Powers New Discoveries

Cornell researchers are developing AI to accelerate discoveries and enable new approaches in sustainable agriculture, nutrition, materials science, precision medicine, and other fields where complex challenges demand new analytical and predictive tools. Collaboration is crucial when exploring AI for scientific discovery, and Cornell's AI Initiative fosters new cross-campus collaborations that bring together diverse expertise.

The AI Initiative also supports the coordination of Cornell’s research infrastructure — such as computing resources — and the policy frameworks needed to advance responsible, high-impact AI research. By treating AI as both an area of study and a catalyst for innovation, Cornell empowers researchers to make breakthroughs that benefit science, society, and the world.

Cornell is a founding member of Empire AI, a consortium of 10 New York state institutions organized to promote responsible research and development, create jobs, and unlock AI opportunities focused on the public good.

With more than $400 million in public and private investment, Empire AI aims to give researchers, public organizations, and small companies the opportunity to develop AI tools that serve public, non-corporate interests in New York.

The consortium created a shared AI computing facility in upstate New York, where Cornell and partners can leverage state-of-the-art computing power to help shape the future of AI.

Cornell Supported AI Tools

Generative AI (GenAI) can accelerate learning, creativity, and research, but it must also be used responsibly. Using tools vetted by Cornell isn’t just a technical preference: every new GenAI tool undergoes a rigorous check for data privacy, security, accessibility, and alignment with university standards. This process helps to safeguard sensitive information, support ethical practice, and strengthen trust across our community.

Additional GenAI tools are currently under review or in early testing. These include Claude.ai, Claude Code, Google Gemini, and ChatGPT Edu. Contact the AI Innovation Hub to inquire about these or other tools, and to get advice about using AI to solve your challenges.

Cornell AI Platform

Cornell's AI Platform is a secure, private "sandbox" for accessing and experimenting with AI tools. It comprises two complementary modules: AI Gateway and AI Agent Studio.

AI Gateway allows you to access frontier models such as Google Gemini, Anthropic Claude, and OpenAI GPT, and to experiment with other models such as Mistral, AWS Nova, and Grok, all in a secure Cornell environment. It also lets you power tools such as Claude Code and Open WebUI, and integrate AI into your own scripts and application development.

AI Agent Studio allows teams to build, manage, and govern AI agents and workflows that run on schedules or respond to specific triggers, while providing observability and auditing tools.

The AI Platform is currently in a pilot phase, and we are actively seeking collaborators to help shape the future of AI at Cornell.

Microsoft Copilot Chat

This universitywide “private” tool provides access to OpenAI GPT models, as well as OpenAI’s DALL-E image-generation model. It enables faculty, staff, and students who are 18 years of age or older to experiment with GenAI text, image, and coding tools without their login and chat data being stored or used to train the large language models. However, it does use Microsoft’s public search engine, where privacy is limited. For this reason, enter only low-risk data: information that the university has made available or published for the explicit use of the general public. If you need a tool approved for higher-risk data, see Ideas, Requests, and Oversight of AI at Cornell for possible next steps.

Adobe Firefly

(Available with an Adobe license)

Firefly allows you to generate images from text, then manipulate and edit them. Coming soon: generative voice and video content.

Zoom AI Companion

The Zoom AI Companion gives hosts and participants shareable meeting summaries and next-steps lists, “highlight reels” in recordings, catch-up options for people joining a meeting late, and more. Currently, the university is evaluating security and privacy considerations for these new features.

Guidelines and Best Practices

Cornell’s guidelines seek to balance the exciting new possibilities offered by these tools with awareness of their limitations and the need for rigorous attention to accuracy, intellectual property, security, privacy, and ethical issues. These guidelines are supported by existing university policies.

When exploring AI tools, it is important to make informed choices about which tools we use and whether they provide privacy and protection of an individual’s personal information and institutional data. Free AI tools that are not offered by Cornell do not provide any material protection of data and should not be used to share or process academic or administrative information.

Accountability

You are accountable for your work, regardless of the tools you use to produce it. When using GenAI tools, always verify the information, check for errors and biases, and take care to avoid copyright infringement. GenAI excels at using predictions and patterns to generate new content, but because it does not understand what it produces, its output is sometimes misleading, outdated, or false.

Confidentiality and Privacy

If you are using public GenAI tools, you cannot enter any Cornell information — or another person’s information — that is confidential, proprietary, subject to federal or state regulations, or otherwise considered sensitive or restricted. Any information you provide to public GenAI tools is considered public and may be stored and used by anyone else.

As noted in the University Privacy Statement, Cornell strives to honor the Privacy Principles: Notice, Choice, Accountability for Onward Transfer, Security, Data Integrity and Purpose Limitation, Access, and Recourse.

Use for Research

The widespread availability of GenAI tools offers new opportunities for creativity and efficiency, but, as with any new tool, responsible and ethical deployment in research and society depends on humans. In Fall 2023, Cornell’s Task Force on the Use of GenAI in Research produced the report “Generative AI in Academic Research: Perspectives and Cultural Norms,” which covers the various stages of the research process in which many faculty, staff, and students participate daily. The report provides guidance for thoughtful use of GenAI in research, identifying opportunities as well as risks and duties in both the development and use of these tools in academic research that aspires to have positive societal impact.

Read the Cornell Chronicle story about the report: Task force offers guidance to researchers on use of AI

Read the full committee report, “Generative AI in Academic Research: Perspectives and Cultural Norms,” on the web or as a PDF.

Tools and Resources

Whether you’re looking to refine a prompt, explore new use cases, or talk through an idea with an expert, these resources connect you with the guidance and hands‑on support you need.

AI Innovation Hub

The AI Innovation Hub is a collaborative space for the Cornell community to explore and experiment with Generative AI. Here, faculty, staff, and students can build and test practical AI tools and applications that improve university operations and support Cornell’s mission.

AI Exploration Series


Join AI Assistant Program Director Ayham Boucher for the debut of a biweekly series for Cornell students, faculty, and staff who want to know more about all things AI. Each 30-minute workshop is held over Zoom at 2 p.m. ET.

Effective Prompts

Crafting an effective prompt is not the same as searching the web. Here are some tools to improve your prompting skills.

GenAI at Cornell on Teams

Community Learning

Learn how others in the Cornell community are leveraging AI tools in creative ways. We highlight interesting stories, lessons learned, and best practices shared by our AI experts. It’s also a great opportunity to share what you’ve learned with the community.

Tools Under Review

Cornell is actively assessing additional GenAI tools to determine which meet our standards for privacy, security, accessibility, and responsible use. Each platform undergoes careful review to ensure it protects sensitive information, supports academic integrity, and aligns with the university’s values.

Contact the AI Innovation Hub to inquire about these or other tools, and to get advice about using AI to solve your challenges.

Anthropic Claude

Anthropic Claude tools include Claude.ai, Claude Code, and Claude Cowork. Cornell is working with Anthropic to shape an offering tailored to higher education, with a focus on meeting the university’s data privacy and security requirements.

OpenAI ChatGPT Edu

We’re working with OpenAI to onboard ChatGPT Edu as a private workspace for Cornell University. We will share more information as this offering becomes available.

GitHub Copilot

GitHub Copilot is an AI-powered pair programmer developed by GitHub (owned by Microsoft) in collaboration with OpenAI. It assists developers by providing autocompletions and code suggestions while they write code.

Google Gemini

Google Gemini brings AI integration to Google Workspace, including features such as Deep Research and Gems. The university is evaluating its privacy, security, licensing, potential cost impacts, and responsible use.

Initiatives and Institutes

Cornell’s many initiatives and institutes are driving the development and ethical advancement of artificial intelligence, reflecting the university’s commitment to leading in this rapidly evolving field.